In this project we have developed three generations of biped robots able to walk and balance on two legs. The stereoscopic head developed in another project has been mounted on this robot.

The main problem when designing bipedal robots is the large number of degrees of freedom that arise once several motors are used to simulate the human joints. In our project we developed small, special-purpose PIC circuits that help us keep the complexity of the task in check. The controllers used for the legs are fuzzy controllers based on a set of fuzzy rules. The robot is modular.
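
To illustrate what a rule-based fuzzy controller for a single joint can look like, here is a minimal sketch in Python. The fuzzy sets, the rule base, and the names `ERROR_SETS`, `RULES`, and `fuzzy_command` are assumptions made for this example; they are not the rules that run on the robot's PIC circuits.

```python
def triangle(x, left, peak, right):
    """Triangular membership: 0 outside (left, right), 1 at peak."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)

# Hypothetical fuzzy sets over the joint-angle error, in degrees.
ERROR_SETS = {
    "negative": (-30.0, -15.0, 0.0),
    "zero": (-15.0, 0.0, 15.0),
    "positive": (0.0, 15.0, 30.0),
}

# Hypothetical rule base: each error label maps to a crisp motor command.
RULES = {"negative": -1.0, "zero": 0.0, "positive": 1.0}

def fuzzy_command(error_deg):
    """Return a motor command in [-1, 1] for a joint-angle error."""
    # Clamp so that large errors fully activate the outer sets.
    e = max(-15.0, min(15.0, error_deg))
    weights = {label: triangle(e, *params) for label, params in ERROR_SETS.items()}
    total = sum(weights.values())
    # Weighted average of the rule outputs (singleton defuzzification).
    return sum(weights[label] * RULES[label] for label in RULES) / total
```

With these assumed sets, `fuzzy_command(7.5)` blends the "zero" and "positive" rules equally and returns `0.5`, while any error of 15 degrees or more saturates at `1.0`.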

We have investigated gaits based on keeping the center of mass above the contact points of the robot. One way of achieving this is to use a pendulum to simulate the movement of the upper body. The gaits we obtained allow the robot to move forward and backward and to turn around.
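
The stability condition behind such gaits can be sketched as a geometric test: project the center of mass onto the ground and check whether it falls inside the polygon spanned by the contact points. The code below is a minimal sketch under that assumption. The function names, the point-mass model of the robot, and the foot dimensions in the test values are hypothetical.

```python
def com_projection(masses, positions):
    """Ground projection (x, y) of the center of mass of point masses.

    positions holds (x, y, z) tuples; z is ignored for the projection.
    """
    total = sum(masses)
    x = sum(m * p[0] for m, p in zip(masses, positions)) / total
    y = sum(m * p[1] for m, p in zip(masses, positions)) / total
    return (x, y)

def inside_convex_polygon(point, polygon):
    """True if point lies inside or on a convex polygon.

    polygon is a list of (x, y) vertices in counter-clockwise order.
    """
    px, py = point
    n = len(polygon)
    for i in range(n):
        ax, ay = polygon[i]
        bx, by = polygon[(i + 1) % n]
        # A negative cross product means the point is to the right of edge a->b.
        cross = (bx - ax) * (py - ay) - (by - ay) * (px - ax)
        if cross < 0:
            return False
    return True

def is_statically_stable(masses, positions, support_polygon):
    """Static stability: the projected center of mass is over the support area."""
    return inside_convex_polygon(com_projection(masses, positions), support_polygon)
```

A gait planner built on this idea would shift the upper body (or a pendulum standing in for it) until the check passes for the stance foot before lifting the other foot.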

In the next stage of our project we are improving the speed of the robot and investigating different types of gaits, including the passive dynamic walking approach. It consists of moving the center of mass forward, accelerating the robot, and avoiding a fall by synchronizing the leg reflexes with the balancing movement.

We are developing a fully self-calibrating stereoscopic computer vision system for the robots.

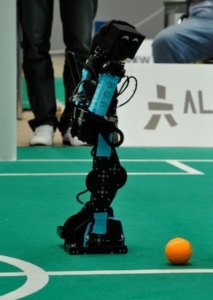

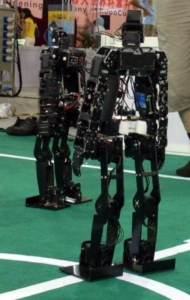

| Our humanoids at RoboCup 2007 (Atlanta) and at the German Open 2009 (Hannover). |

| Engineers of the future visit the university (Long Night of the Sciences 2009) |

Adaptive Vision for Mobile Robots

In our project we have developed new algorithms for a) fast and efficient segmentation of color images, b) real-time recognition of features on the field, and c) real-time localization of the robots. Localization of the robot is done using Expectation Maximization, Kalman filtering, and Markov localization methods.
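
As an illustration of one of the methods named above, the following is a minimal sketch of a one-dimensional Kalman filter that tracks a robot's position along a single axis. The scalar state, the function names, and the noise values are assumptions for this example; the robots' actual localization works on a richer state and combines several methods.

```python
def kalman_predict(mean, var, motion, motion_var):
    """Prediction step: shift the estimate by the commanded motion.

    Uncertainty grows by the motion noise.
    """
    return mean + motion, var + motion_var

def kalman_update(mean, var, measurement, meas_var):
    """Correction step: blend the estimate with a position measurement.

    The gain weights the measurement by the relative uncertainties.
    """
    gain = var / (var + meas_var)
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var
```

For example, starting from a position estimate of 0.0 with variance 1.0, a measurement of 2.0 with the same variance moves the estimate halfway, to 1.0, and halves the variance to 0.5.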

The colors recognized by the robots are not adjusted by human operators. They are learned by the robots. The robots initially self-localize on the field by using only black and white information (the white lines are still recognizable). From an initial localization, the robot formulates hypotheses about the colors of the regions enclosed by white lines and then computes its position again, possibly after moving or rotating. Then new hypotheses are formulated, and so on. The whole color table can be filled in one or two seconds using this approach. The table can also be updated while playing.
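
One way to picture the table-filling step is as a vote: every pixel that falls inside a region with a hypothesized label votes for that label in its color cell. The sketch below assumes a quantized RGB lookup table and majority voting. The function names, the 4-bit quantization, and the labels are hypothetical and do not describe the robots' actual data structures.

```python
from collections import Counter, defaultdict

def quantize(rgb, bits=4):
    """Reduce each 8-bit channel to its top bits to obtain a table cell."""
    shift = 8 - bits
    return tuple(channel >> shift for channel in rgb)

def learn_color_table(samples, bits=4):
    """Build a color table from (rgb, hypothesized_label) pairs.

    Each cell receives the label that most of its samples voted for,
    which tolerates a few pixels with a wrong hypothesis.
    """
    votes = defaultdict(Counter)
    for rgb, label in samples:
        votes[quantize(rgb, bits)][label] += 1
    return {cell: counter.most_common(1)[0][0] for cell, counter in votes.items()}

def classify(table, rgb, bits=4, default="unknown"):
    """Look up the learned label for a pixel color."""
    return table.get(quantize(rgb, bits), default)
```

Updating the table while playing then amounts to adding new votes as the robot relocalizes and forms new hypotheses.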

| Initial black-and-white segmentation (left); automatically learned color calibration (right) |

Neural Coach for Autonomous Robots

We have been programming autonomous mobile robots for robotic competitions. Up until now, the robots’ behavior was programmed directly in great detail. In this project we are investigating ways of controlling the robots with programs that can learn from experience. We want to teach the robots to make moves as if they were human players being instructed by a coach. We want to give them static examples of a play situation and we expect them to generalize to new, unseen, situations. The figure shows an example of the way a human coach would explain to a team of human players (playing five-a-side soccer) how to play to get around a barrier of opponents.

As can be seen from the diagram, the human coach uses an abstract form of communication, a sketch of the game situation, to illustrate the strategy needed to move two players forward. The human coach does not need to draw all possible variations of a barrier of opponents for the team members to understand the tactic: pass to the left, and then try to double-pass to the other player, who should have come forward. Using this approach, we do not have to program all game alternatives by hand. We can just compile a set of static examples of “good” and “bad” moves. We can then use machine learning to adapt the behavior software to the learned scenarios.

The static field situation depicted in the diagram has to be encoded as a set of features that can be processed by one or several neural networks. The network learns, for example, what a “good” passing situation is, and can also learn to show the robots the field positions with a high probability of reward. Using the output of such networks, the robots can anticipate game positions. They stop being purely reactive and become more forward-looking. It has been said that most of our own conscious processing goes into predicting the future, that is, formulating expectations. When we walk, and when we see, we predict our future position and even the position of our eyes. We know that we are moving in the world; the world is not moving around us. Predicting the best possible movements for the robots amounts to making a strategic evaluation of the alternatives the opponent has. This is exactly what we want to achieve.
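
The idea of learning "good" and "bad" passing situations from encoded features can be sketched with the simplest possible network, a single sigmoid neuron trained by gradient descent. Everything specific here is an assumption for illustration: the two features (normalized pass distance and normalized clearance from the nearest opponent), the example data in `EXAMPLES`, and the function names. The neural coach itself is not described at this level of detail.

```python
import math

# Hypothetical training set: (pass_distance, opponent_clearance) -> 1 good, 0 bad.
# Both features are assumed to be normalized to the range [0, 1].
EXAMPLES = [
    ((0.2, 0.9), 1), ((0.3, 0.8), 1), ((0.4, 0.7), 1),
    ((0.8, 0.1), 0), ((0.7, 0.2), 0), ((0.6, 0.3), 0),
]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(examples, epochs=2000, rate=0.5):
    """Fit one sigmoid neuron with batch gradient descent on the log loss."""
    n_features = len(examples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in examples:
            activation = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(activation) - label
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        weights = [w - rate * g / len(examples) for w, g in zip(weights, grad_w)]
        bias -= rate * grad_b / len(examples)
    return weights, bias

def pass_quality(model, features):
    """Estimated probability that a situation is a good pass."""
    weights, bias = model
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
```

After `model = train_neuron(EXAMPLES)`, calling `pass_quality(model, (0.25, 0.85))` scores a situation that was not in the training set, which is the kind of generalization from static examples described above. A real system would use more features and a network with hidden layers.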

The first version of the neural coach was used during RoboCup 2005. The FU-Fighters won first place at that competition.

Information dissemination

The FU-Fighters return 25,000 Google hits on the Internet, while the new robots, the FUmanoids, have reached the 7,470-hit mark.

| The humanoid robots in the news (left), list of videos of games (FUmanoids, right) |