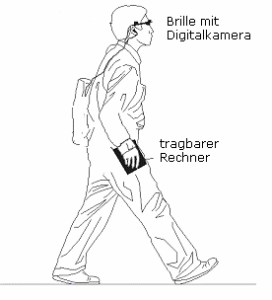

Imagine a pair of reading glasses with a small video camera attached. The signal from the camera is sent to a small sub-notebook computer. The camera is mounted on a spherical joint and can rotate, so it can capture images as if it had a wide-angle lens. Now imagine a blind person using these glasses. Sitting at home, the person holds a newspaper in front of his or her face and, using spoken commands, orders the computer to read the text aloud. The computer first assembles a single picture from the several images provided by the camera and detects the boundaries of the page. It then recognizes the headlines and reads them aloud using a software speech synthesizer. The blind person then orders the computer to read the first article, the third, and so on.

| The reading system for the blind |

Imagine the same blind person being able to walk around a building and order the computer to read the nameplates on doors. The camera actively looks for doors, captures the image, and proceeds to recognize the text written on them.

Imagine a blind person entering a restaurant and being able to read the menu, or the label on a bottle. Imagine a blind person able to ask the computer, with a spoken command, to look for windows or doors in a room. Imagine that the computer tells the blind person that it can detect five faces in a room, so that he or she knows how many people are there.

This is exactly what we want to achieve with our project. We want to build a portable reading system for the blind, which can also help in simple pattern recognition situations.

From 2005 to 2007 we conducted a project in which we developed the first version of Saccadic(i), our vision of intelligent reading glasses for the blind. We did the following:

- We applied piezoelectric elements to move the imaging chip of a video camera.

- We developed super resolution software based on the drizzling method, which improves the image resolution fourfold.

- We manually controlled the camera to take several images of a book or newspaper.

- We integrated a commercial stitcher into our system to provide the stitched image for the OCR.

- We wrote our own layout recognition software using the FineReader OCR engine library.

- We wrote a navigation interface for spoken commands.

- We integrated a commercial SpeechXML-based speech recognizer with the system.

- We installed the complete software on a JVC laptop with a 1 GHz processor and 1 GB of memory.
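The spoken-command navigation mentioned in the list above can be pictured with a small sketch. It is not the project's interface: it assumes the speech recognizer has already returned the utterance as plain text, and the function name `parse_command`, the action names, and the phrase patterns are hypothetical, chosen only to mirror commands such as "read the first article, the third".

```python
import re

# Hypothetical vocabulary: ordinal words the sketch understands.
ORDINALS = {"first": 1, "second": 2, "third": 3, "fourth": 4, "fifth": 5}


def parse_command(utterance):
    """Map a recognized utterance to an (action, argument) pair, or None."""
    text = utterance.lower().strip()

    # "read headlines" / "read the headlines" -> read all headlines aloud.
    if text in ("read headlines", "read the headlines"):
        return ("read_headlines", None)

    # "read the third article" -> read article number 3.
    match = re.fullmatch(r"read (?:the )?(\w+) article", text)
    if match and match.group(1) in ORDINALS:
        return ("read_article", ORDINALS[match.group(1)])

    # Anything else is treated as not understood.
    return None
```

Returning `None` for unrecognized phrases lets the caller ask the user to repeat the command instead of guessing.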

The system works, and the first public demos have been given. The major shortcomings of the current system are that it is slow (stitching alone requires several seconds) and that it is not portable: the camera system is too heavy and the laptop is still too big. In our project we have to:

- Miniaturize the system.

- Speed it up to real time.

- Make it robust against changes in lighting.

- Improve the image recognition accuracy.

- Integrate more features.

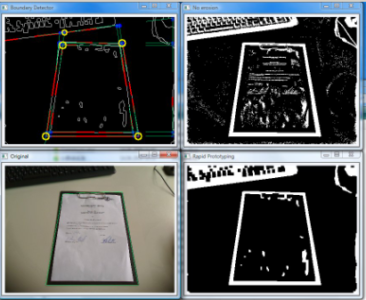

The diagram shows the steps followed in our current Saccadic(i) prototype. The video camera, under manual control, takes several mutually displaced images of a book or newspaper, and drizzling is used to increase their resolution. The stitching software automatically produces a single image, which is passed on to the layout recognition software. This software is based on the Omnipage library, for which we have a license. The laptop accepts spoken commands and can read the text using speech synthesis.
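The resolution-enhancement step can be illustrated with a much simplified sketch. It is not the project's software: it implements only the point-kernel limit of drizzling, in which each input pixel is dropped onto the nearest cell of a finer grid and overlapping contributions are averaged. It also assumes that the sub-pixel offset of every frame is already known. The function name `drizzle_point`, its parameters, and the default scale factor of 2 are assumptions made for illustration.

```python
def drizzle_point(frames, offsets, scale=2):
    """Combine displaced low-resolution frames on a grid `scale` times finer.

    frames  : list of 2-D lists (rows of pixel values), all the same size
    offsets : list of (dy, dx) shifts of each frame, in input-pixel units
              (assumed known; a real system would have to estimate them)
    Returns a 2-D list; cells that received no input pixel are None.
    """
    rows, cols = len(frames[0]), len(frames[0][0])
    out_rows, out_cols = rows * scale, cols * scale
    total = [[0.0] * out_cols for _ in range(out_rows)]
    weight = [[0.0] * out_cols for _ in range(out_rows)]

    for frame, (dy, dx) in zip(frames, offsets):
        for y in range(rows):
            for x in range(cols):
                # Position of this input pixel on the fine output grid.
                oy = int(round((y + dy) * scale))
                ox = int(round((x + dx) * scale))
                if 0 <= oy < out_rows and 0 <= ox < out_cols:
                    total[oy][ox] += frame[y][x]
                    weight[oy][ox] += 1.0

    # Average the accumulated values; leave untouched cells empty.
    return [
        [total[r][c] / weight[r][c] if weight[r][c] else None
         for c in range(out_cols)]
        for r in range(out_rows)
    ]


# Four 2x2 frames, each shifted by half a pixel, fill a 4x4 grid.
fine = [[r * 4 + c for c in range(4)] for r in range(4)]
frames, offsets = [], []
for a in (0, 1):
    for b in (0, 1):
        frames.append([[fine[2 * y + a][2 * x + b] for x in range(2)]
                       for y in range(2)])
        offsets.append((a / 2, b / 2))
restored = drizzle_point(frames, offsets, scale=2)
```

With a scale factor of 2 the output grid has four times as many pixels as each input frame. Full drizzling additionally spreads each input pixel over the output cells it overlaps, weighted by area, which this sketch omits.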

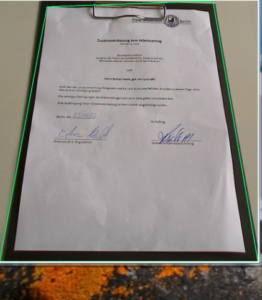

| A document captured by the portable camera. Several processing steps can be seen on the right. |

This project was a finalist in the 2006 “Bionics Ideas Contest” conducted by the Ministry of Education and Research. The new phase of the project is being funded by the DFG.

A video of the system has been uploaded to the Internet. The image below is a screenshot from that video, showing the working system.